Claude Opus 4.1 vs ChatGPT-5: The Ultimate Face-off (2025 Guide)

Introduction: The Battle for the Best AI Coding Assistant in 2025

In today’s fast-evolving tech world, the question facing developers and enterprises isn’t whether to use an AI coding assistant but which one to use. Enter the titans: Anthropic’s Claude Opus 4.1 and OpenAI’s ChatGPT-5. Both promise major improvements in software engineering, but their approaches and results differ in crucial ways.

This blog post breaks down the latest benchmarks, features, and workflows. Whether you’re searching for “best AI for code generation 2025” or “SWE-bench model comparison”, or you want to know which AI automates workflows better, get ready for a thorough, actionable comparison.

If you want to get the most out of AI coding assistants like Claude and GPT-5, our Ultimate AI Prompt Guide offers expert tips and prompts, an invaluable resource for developers aiming to boost their workflow.

What Are Claude Opus 4.1 and ChatGPT-5?

- Claude Opus 4.1: Anthropic’s flagship AI, optimized for enterprise-level codebase refactoring, advanced debugging, and robust agentic workflows. Integrated into platforms like GitHub Copilot and Amazon Bedrock, it prioritizes precision and reliability for both large and small teams.

- ChatGPT-5: OpenAI’s 2025 powerhouse, blending text, code, image, audio, and video inputs in a single, unified model. Its 1-million-token context window makes it an excellent fit for complex projects, system migrations, and multimodal development environments.

Why Search Intent Matters Here

If you’re a developer, CTO, or AI enthusiast searching for “SWE-bench coding benchmarks” or “AI coding agent for automation”, your intent is likely comparative: which tool helps me write better code, faster and with fewer bugs? We designed this post to answer all of those related queries in one in-depth resource.

Coding Benchmarks: Claude Opus 4.1 vs ChatGPT-5

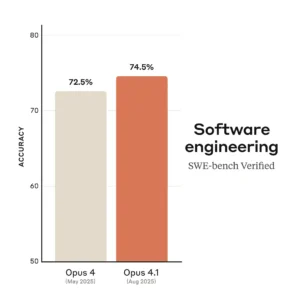

SWE-bench Verified Results (2025)

| Model | SWE-bench Verified* | Context Window | Strengths |
|---|---|---|---|
| Claude Opus 4.1 | 74.5% | 200,000 tokens | Multi-file fixes, reliable debugging, minimal hallucination |
| GPT-5 | Data pending (expected >61%) | Up to 1,000,000 tokens | Multimodal tasks, persistent memory, workflow automation |

*SWE-bench Verified is a human-validated subset of the SWE-bench benchmark that measures how often a model resolves real GitHub issues from Python repositories; higher is better.
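To make that percentage concrete: a SWE-bench task counts as resolved only when the tests that reproduce the issue now pass and the repository’s previously passing tests still pass. The minimal sketch below shows that scoring arithmetic on made-up results; the `TaskResult` class and the instance IDs are hypothetical illustrations, not the official evaluation harness.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Hypothetical outcome of one task after applying a model's patch."""
    instance_id: str
    fail_to_pass_ok: bool  # do the tests that reproduce the issue now pass?
    pass_to_pass_ok: bool  # do the previously passing tests still pass?


def is_resolved(result: TaskResult) -> bool:
    # A task counts only if the fix works AND nothing else broke.
    return result.fail_to_pass_ok and result.pass_to_pass_ok


def resolved_rate(results: list[TaskResult]) -> float:
    """Percentage of tasks resolved; 0.0 for an empty run."""
    if not results:
        return 0.0
    resolved = sum(1 for r in results if is_resolved(r))
    return 100.0 * resolved / len(results)


# Made-up results for four tasks (not real benchmark data).
runs = [
    TaskResult("repo__issue-1", True, True),
    TaskResult("repo__issue-2", True, False),  # fixed the bug but broke another test
    TaskResult("repo__issue-3", False, True),  # bug not fixed
    TaskResult("repo__issue-4", True, True),
]
print(f"{resolved_rate(runs):.1f}%")  # 50.0%
```

Note the second task: a patch that fixes the reported bug but breaks an existing test earns no credit, which is why this benchmark rewards careful, regression-free fixes.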

Real-World Takeaways

Claude Opus 4.1 beats competitors with a record SWE-bench Verified score, especially in multi-file Python workflows—delivering fewer “hallucinations” (incorrect results) and more reliable, production-ready code.

GPT-5 isn’t far behind: its anticipated high score and unique ability to “understand” enormous codebases (even complete project histories) signal a big leap forward for agent-based, multimodal software development.

In-Depth Feature Comparison

Claude Opus 4.1: Coding Powerhouse

- Precision & Reliability: Market leader for automated code refactoring, bug isolation, and fixing critical issues in production.

- Context Memory: Handles up to 200,000 tokens, enough to “see” large portions of a codebase or extensive documentation in a single request.

- Enterprise Integrations: Best-in-class integration with developer and cloud platforms (GitHub Copilot, Amazon Bedrock, Google Cloud Vertex AI).

- Agent Workflows: Excels at autonomous pull request reviews, code suggestions, and batch processing.

ChatGPT-5: The Unified AI Engine

- Massive Contextual Awareness: One million tokens unlocks truly large-scale migrations and documentation processing (think: months of project history loaded at once).

- Multimodal Inputs: Processes and generates text, code, images, audio, video—all in one model. Imagine resolving a bug with code, screenshots, and voice notes, effortlessly.

- Persistent Memory: Remembers your preferences and project context long-term, reducing prompt fatigue.

- Agent Orchestration: Improved support for chaining tasks together (e.g., debugging → writing tests → deployment).
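Whether a codebase “fits” depends on how many tokens it occupies. The sketch below makes a rough check against the window sizes quoted in this article. It assumes about 4 characters per token, a common rule of thumb rather than either vendor’s actual tokenizer, and the 20,000-token headroom for instructions and the reply is likewise an illustrative assumption.

```python
import math
from pathlib import Path

# Context-window sizes as quoted in this article (tokens).
CONTEXT_WINDOWS = {"Claude Opus 4.1": 200_000, "GPT-5": 1_000_000}

# Assumption: ~4 characters per token; real tokenizers vary by language and content.
CHARS_PER_TOKEN = 4


def estimate_tokens(num_chars: int) -> int:
    """Approximate token count from a character count, rounded up."""
    return math.ceil(num_chars / CHARS_PER_TOKEN)


def source_chars(root: str, suffix: str = ".py") -> int:
    """Total bytes of matching files under root (a proxy for characters in mostly-ASCII code)."""
    return sum(p.stat().st_size for p in Path(root).rglob(f"*{suffix}") if p.is_file())


def fits(num_chars: int, window: int, headroom: int = 20_000) -> bool:
    """True if the estimated prompt still leaves `headroom` tokens for instructions and output."""
    return estimate_tokens(num_chars) + headroom <= window


# Hypothetical 2 MB codebase, roughly 500,000 tokens under the heuristic above.
size = 2_000_000
for model, window in CONTEXT_WINDOWS.items():
    print(model, fits(size, window))
# Claude Opus 4.1 False
# GPT-5 True
```

In practice you would call `source_chars("path/to/repo")` on your own project; when a repository does not fit, splitting it into smaller, focused requests is the usual workaround.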

Pricing Snapshot

- Claude Opus 4.1: Transparent pricing ($15/million input tokens, $75/million output tokens).

- GPT-5: Pricing details not fully released for 2025; typically competitive but varies by volume and integration.
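Per-million-token pricing is easiest to reason about with a quick calculation. The sketch below applies the Claude Opus 4.1 rates listed above to a hypothetical workload; the request sizes and review count are illustrative assumptions, and the estimate ignores any volume, caching, or batch discounts a provider may offer.

```python
# Claude Opus 4.1 API rates quoted above, in USD per million tokens.
OPUS_41_INPUT_PER_M = 15.00
OPUS_41_OUTPUT_PER_M = 75.00


def request_cost(input_tokens: int, output_tokens: int,
                 input_rate: float = OPUS_41_INPUT_PER_M,
                 output_rate: float = OPUS_41_OUTPUT_PER_M) -> float:
    """Cost in USD of one request, given per-million-token rates."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# Hypothetical PR review: 100k tokens of code in, 10k tokens of feedback out.
per_review = request_cost(100_000, 10_000)
print(f"${per_review:.2f} per review")             # $2.25 per review
print(f"${per_review * 200:.2f} for 200 reviews")  # $450.00 for 200 reviews
```

Because output tokens cost five times as much as input tokens at these rates, asking for concise answers lowers the bill more than trimming the prompt does. Once GPT-5 rates are published, they can be passed in through `input_rate` and `output_rate` for a side-by-side estimate.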

How to Choose: Real-World Use Cases and Automation

When to Use Claude Opus 4.1

- You need the highest verified Python bug-fixing accuracy (based on SWE-bench Verified).

- Your workflows demand multi-file refactoring and bulletproof bug fixes.

- Automating code reviews and batch processing is mission-critical.

- Predictable pricing and enterprise cloud integration matter.

When to Use GPT-5

- Your projects require multimodal input and output (e.g., combining code, diagrams, and meeting transcripts).

- You work with enormous codebases or are implementing full-system migrations.

- You want persistent project memory and have long-term automation goals.

- You are an early adopter of agentic AI features and workflow chaining.

Pro Tip: For many organizations, using both models in tandem (Claude for code generation, GPT-5 for multimodal tasks) yields the best results.
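A team running both models needs some rule for which task goes where. The sketch below encodes this article’s rules of thumb as a hypothetical router: tasks with non-text attachments, or with more context than Claude’s quoted 200,000-token window, go to GPT-5, and everything else goes to Claude Opus 4.1. The `Task` fields and `pick_model` function are illustrative names, not part of either vendor’s API.

```python
from dataclasses import dataclass, field

CLAUDE_WINDOW = 200_000  # Claude Opus 4.1 context window as quoted in this article


@dataclass
class Task:
    """Hypothetical description of a unit of work to hand to a model."""
    description: str
    attachments: set[str] = field(default_factory=set)  # e.g. {"image", "audio"}
    context_tokens: int = 0  # estimated size of the code/docs the task needs


def pick_model(task: Task) -> str:
    """Route a task using this article's rules of thumb (an assumption, not a benchmark)."""
    # Screenshots, recordings, or video go to the multimodal model.
    if task.attachments - {"text", "code"}:
        return "GPT-5"
    # Context larger than Claude's window goes to the larger-window model.
    if task.context_tokens > CLAUDE_WINDOW:
        return "GPT-5"
    # Everything else, such as multi-file Python fixes and PR reviews, goes to Claude.
    return "Claude Opus 4.1"


print(pick_model(Task("Fix failing test in utils.py", context_tokens=30_000)))
print(pick_model(Task("Bug shown in a screenshot", attachments={"image"})))
print(pick_model(Task("Migrate a monolith", context_tokens=600_000)))
```

Keeping the rules in one small function makes them easy to revise as benchmark results and pricing change.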

Claude Opus 4.1 vs GPT-5: Comparison at a Glance

| Feature | Claude Opus 4.1 | GPT-5 (OpenAI) |
|---|---|---|
| Accuracy (SWE-bench Verified) | 74.5% | Expected 61%+ |
| Max Context Window | 200,000 tokens | 1,000,000 tokens |
| Multimodal Capabilities | Text, code | Text, image, audio, video, code |
| Integration | GitHub, Amazon Bedrock | IDEs, cloud platforms, all-in-one |
| Agent/Autonomous Workflow | Production-proven | Advanced, in beta |
| Pricing (as of August 2025) | Transparent | To be announced |

Actionable Tips for Implementing AI Coding Assistants

- Use AI model strengths: Delegate precision Python fixes to Claude Opus 4.1; leverage GPT-5 for complex, multimedia coding problems.

- Integrate for automation: Automate pull requests, test writing, and bug triage with agent workflows.

- Optimize developer time: Embed in CI/CD pipelines for continuous improvement and fewer regressions.
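When a CI job asks a model to review a large pull request, the changed files may need to be split across several requests. The sketch below groups files greedily under a per-request token budget. The `batch_files` helper, the 4-characters-per-token estimate, and the budget value are hypothetical assumptions, and the actual call to Claude or GPT-5 is deliberately left out.

```python
import math

CHARS_PER_TOKEN = 4  # assumption: rough heuristic, real tokenizers differ


def batch_files(files: dict[str, str], budget_tokens: int) -> list[list[str]]:
    """Group changed files into review batches whose estimated size stays within budget.

    Files are taken greedily in the given order; a single file larger than
    the budget is placed in a batch of its own.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for path, content in files.items():
        cost = math.ceil(len(content) / CHARS_PER_TOKEN)
        # Start a new batch when adding this file would exceed the budget.
        if current and used + cost > budget_tokens:
            batches.append(current)
            current, used = [], 0
        current.append(path)
        used += cost
    if current:
        batches.append(current)
    return batches


# Hypothetical diff contents: roughly 100, 100, and 200 tokens.
changed = {"a.py": "x" * 400, "b.py": "y" * 400, "c.py": "z" * 800}
print(batch_files(changed, budget_tokens=250))  # [['a.py', 'b.py'], ['c.py']]
```

Each batch would then become one review request. Grouping related files (for example, by directory) before batching tends to give the model more useful context than arbitrary order.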

To enhance your experience with ChatGPT-4o and other AI models, consider exploring our Ultimate AI Prompt Guide—a comprehensive prompt book designed to help you master effective AI interactions and get the best results.

Explore more insights, best practices, and tutorials in our AI development blog and get started with DigeHub’s AI integration services.

OpenAI has also adjusted its offering by bringing back GPT-4o following backlash to GPT-5’s deployment, alongside plans for continual improvements as promised by CEO Sam Altman. For details, read the latest blog on this development: ChatGPT-4o Returns After GPT-5 Backlash — Sam Altman Promises More Upgrades.

Frequently Asked Questions

Q1: Is Claude Opus 4.1 better than GPT-5 for coding?

Currently, Claude Opus 4.1 performs best on Python benchmarks like SWE-bench, especially for multi-file debugging and codebase refactoring. However, GPT-5 is a strong contender for multimodal and persistent memory use cases.

Q2: Can both Claude and GPT-5 be integrated into my developer workflow?

Yes! Many companies use Claude for precise code fixes and GPT-5 for agentic tasks, documentation, and projects that benefit from multimodal input.

Q3: Which model is more affordable for enterprise use?

Claude Opus 4.1 has clear, published pricing as of 2025. GPT-5’s pricing is expected to be competitive but varies based on usage.

Q4: What are the best use cases for Claude Opus 4.1?

Automated PR reviews, multi-file bug fixes, robust enterprise debugging, and large codebase refactoring.

Q5: How do I choose the right AI coding assistant for my company?

Audit your workflow: If you need coding accuracy, choose Claude. For multimodal inputs or agentic automation, pilot GPT-5. Use both for maximum productivity.

For developers and enterprise teams aiming to accelerate software delivery in 2025, the trade-off is clear: Claude Opus 4.1 leads in SWE-bench Verified accuracy, while GPT-5 offers game-changing context and multimodal abilities. Maximize value by integrating both where they shine, and consult experts for a custom implementation.

Ready to power up your developer workflow with next-gen AI?

Visit DigeHub now for expert guides, integration services, and the latest on AI-driven software engineering.

Want more insights? Explore our blog category for hands-on AI code generation guides and workflow automation tips!